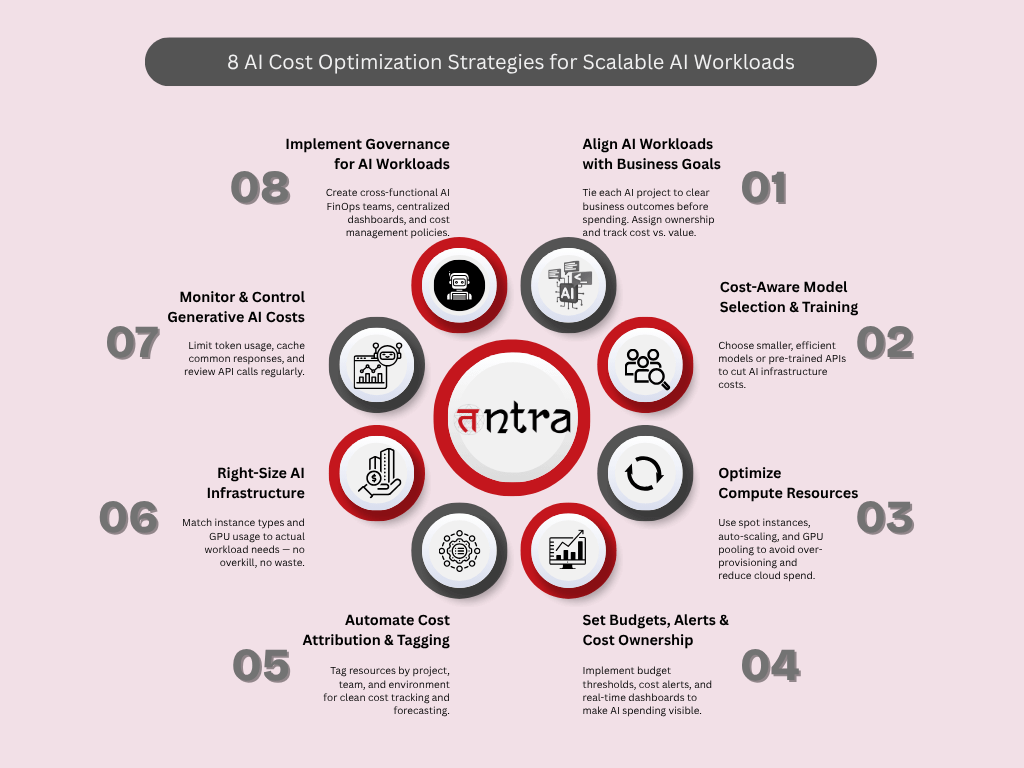

FinOps for AI: 8 Cost Optimization Strategies to Scale AI Workloads Efficiently

AI workloads are resource-heavy, fast-growing, and financially complex. That’s where FinOps for AI steps in, bringing clarity, control, and accountability to cloud financial operations for AI spending. This article breaks down eight essential strategies, from cost-aware model selection to governance for generative AI cost control. Learn how to align costs with outcomes, right-size infrastructure, and avoid common pitfalls. Whether you’re scaling AI across teams or just starting out, these insights will help you stay efficient, innovative, and future-ready. At Tntra, our expertise in fintech software development and fintech practice ensures AI and financial systems are implemented with scalability and cost efficiency in mind. Continue reading to learn more.

What is FinOps for AI and How It Enables AI Cloud Cost Management

Artificial Intelligence is no longer the stuff of R&D labs or Silicon Valley buzz. It’s now at the core of everything, from customer support and fraud detection to drug discovery and real-time personalization. But with great power comes a not-so-glamorous reality: massive, complex, and often unpredictable costs.

If you’re leading AI initiatives at scale, you’ve likely asked yourself:

“Why is our AI bill growing faster than our results?”

“Are we spending wisely on infrastructure?”

“How do we keep control without slowing innovation?”

Welcome to the world of FinOps for AI, the discipline of bringing financial accountability to the complex and resource-hungry realm of cloud-based AI.

In this article, we’ll break down the top 8 AI cost optimization strategies that leading companies adopt to make AI sustainable, scalable, and accountable. These aren’t vague tips — they’re actionable strategies you can use to govern, manage, and optimize AI workloads in the real world.

1. Align AI Workloads with Business Objectives (Before Spending a Dollar)

The first principle of FinOps for AI? Don’t spend before you align.

Too many teams jump into training models, running experiments, or buying GPUs without clearly tying workloads to business value. This leads to AI projects with unclear ROI and runaway budgets.

Your FinOps strategy should start with clarity:

- What problem is this AI model solving?

- What does success look like (accuracy, latency, revenue impact)?

- What’s the budget, and who owns it?

Before you train, fine-tune, or deploy, tie the initiative to a business outcome. This helps prevent waste and gives you a lens to evaluate cost vs. value at every stage. For example, in our Risk Mitigation Services Case Study, aligning technology adoption with financial outcomes was key to reducing exposure and improving ROI.

FinOps best practice: Assign a “business owner” to every AI workload and track success metrics alongside cost metrics.

2. Use Cost-Aware Model Selection and Training

Not every model needs to be a 70-billion-parameter transformer. And not every task needs a model trained from scratch.

Model selection is where AI cost optimization begins.

Instead of defaulting to the most powerful model:

- Consider smaller open-source models that require fewer resources

- Explore transfer learning or fine-tuning on smaller subsets

- Use pre-trained APIs for tasks like text classification or image tagging

Training costs can vary by orders of magnitude depending on architecture, data size, and hyperparameters. A thoughtful approach to model design can cut your AI infrastructure costs by 50–90%.

FinOps for cloud AI tip: Incorporate cost simulations or budget forecasts into your model selection process.
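As a rough illustration of the cost simulation mentioned in the tip above, the sketch below estimates the compute cost of candidate training plans and filters them against a budget. The plan names, GPU counts, hours, and hourly rates are made-up assumptions for illustration, not real prices or benchmarks.

```python
from dataclasses import dataclass


@dataclass
class TrainingPlan:
    """A candidate training approach with assumed resource needs."""
    name: str
    gpu_count: int
    hours: float
    hourly_rate_per_gpu: float  # assumed price in USD, not a real quote

    def estimated_cost(self) -> float:
        # Simple linear estimate: GPUs x hours x price per GPU-hour.
        return self.gpu_count * self.hours * self.hourly_rate_per_gpu


def plans_within_budget(plans, budget):
    """Return the plans whose estimate fits the budget, cheapest first."""
    affordable = [p for p in plans if p.estimated_cost() <= budget]
    return sorted(affordable, key=lambda p: p.estimated_cost())


# Hypothetical candidates for the same task.
plans = [
    TrainingPlan("fine-tune-small", gpu_count=1, hours=10, hourly_rate_per_gpu=2.0),
    TrainingPlan("train-large", gpu_count=8, hours=100, hourly_rate_per_gpu=4.0),
]

for plan in plans_within_budget(plans, budget=500.0):
    print(f"{plan.name}: ${plan.estimated_cost():,.2f}")
```

A real forecast would also account for storage, data transfer, and repeated experiments, so treat an estimate like this as a lower bound when comparing options.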

3. Optimize Compute for AI Workloads: Spot Instances & Auto-scaling

When it comes to AI workloads, compute is king — and also the biggest cost driver.

That’s why smart teams use spot instances, reserved capacity, and auto-scaling policies to stretch their budget.

- Spot instances (e.g. AWS EC2 Spot, Azure Spot VMs) offer discounts of up to 90% off on-demand prices, but they can be reclaimed at short notice, so jobs need checkpointing and interruption-aware scheduling

- Auto-scaling ensures you’re not over-provisioned during idle times

- GPU pooling across teams reduces duplication and idle time

Managing compute efficiently is one of the core pillars of AI FinOps strategies. It transforms your infrastructure from “always-on and overkill” to “just-enough and cost-smart.”

FinOps best practice for AI: Use workload-aware orchestration tools like Kubernetes, Ray, or MosaicML to manage dynamic compute needs.
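To make the spot-versus-on-demand trade-off concrete, here is a minimal sketch that estimates the effective cost of a spot job once work lost to interruptions is included. The discount, job length, and rework fraction are illustrative assumptions; actual discounts and interruption rates vary by provider, region, and instance type.

```python
def effective_spot_cost(on_demand_hourly, discount, job_hours, rework_fraction):
    """Estimate spot cost including compute repeated after interruptions.

    discount: fraction off the on-demand price (0.7 means 70% off).
    rework_fraction: extra hours redone after interruptions
                     (0.2 means 20% more compute than an uninterrupted run).
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    if rework_fraction < 0:
        raise ValueError("rework_fraction must be non-negative")
    spot_hourly = on_demand_hourly * (1 - discount)
    return spot_hourly * job_hours * (1 + rework_fraction)


# Hypothetical job: 100 hours on an instance with an assumed $10/hour on-demand price.
on_demand_total = 10.0 * 100
spot_total = effective_spot_cost(10.0, discount=0.7, job_hours=100, rework_fraction=0.2)

print(f"on-demand: ${on_demand_total:,.2f}")
print(f"spot (with rework): ${spot_total:,.2f}")
```

The point of the rework term is that frequent checkpointing keeps it small; without checkpoints, a single late interruption can erase much of the discount.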

4. Set Clear Budgets, Alerts, and Cost Ownership

AI projects often lack the cost guardrails that traditional software teams have. That ends here.

Implement budget thresholds, cost anomaly alerts, and real-time dashboards for all major AI workflows. A similar cost-visibility approach helped a financial institution boost lead generation in our Credit Union Growth with Salesforce FSC Case Study. At a minimum, cover:

- Training jobs

- Inference APIs

- Data pipeline usage

- GPU/TPU cluster consumption

Pro tip: Use tools like AWS Budgets, GCP Billing, Azure Cost Management, or third-party FinOps platforms like CloudHealth or Apptio.
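The logic behind budget thresholds and anomaly alerts can be sketched in a few lines. This is a simplified illustration, not how any of the tools above work internally; the 80% warning level and the "twice the trailing average" rule are assumptions chosen for the example.

```python
from statistics import mean


def budget_status(spend, budget, warn_at=0.8):
    """Classify month-to-date spend against a budget."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    if ratio >= 1.0:
        return "over_budget"
    if ratio >= warn_at:
        return "warning"
    return "ok"


def is_anomalous(recent_daily_spend, today, factor=2.0):
    """Flag today's spend if it exceeds `factor` times the trailing average."""
    if not recent_daily_spend:
        return False  # no history yet, nothing to compare against
    return today > factor * mean(recent_daily_spend)


# Hypothetical figures for a training pipeline.
print(budget_status(spend=850, budget=1000))
print(is_anomalous([100, 110, 90], today=250))
```

In practice these checks would read from billing exports and notify the workload's owner, which is why cost ownership belongs in the same section as alerts.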

5. Automate Cost Attribution and Tagging

You can’t manage what you can’t see. And in AI, visibility is everything.

Use automated tagging frameworks to label resources by:

- Project

- Team

- Model type

- Environment (dev/test/prod)

- Business unit

This allows for clean cost attribution, easier forecasting, and more accountability across the org. Without tagging, AI becomes a black box on your cloud bill.

AI cloud cost management tip: Enforce tagging via IaC (Infrastructure as Code) policies using Terraform or Pulumi.

Consistent tagging also makes AI workload cost forecasting more reliable and gives clear visibility into how AI resources are allocated.
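A tagging policy is easiest to enforce when it is checked automatically. The sketch below validates a resource's tags against the dimensions listed above; the tag key names (`project`, `model_type`, and so on) are assumed spellings for illustration, and a check like this would typically run in CI alongside your IaC tooling.

```python
# Assumed tag keys, mirroring the dimensions listed in this section.
REQUIRED_TAGS = {"project", "team", "model_type", "environment", "business_unit"}
ALLOWED_ENVIRONMENTS = {"dev", "test", "prod"}


def tag_violations(tags):
    """Return a list of human-readable problems with a resource's tags."""
    missing = REQUIRED_TAGS - set(tags)
    problems = [f"missing tag: {key}" for key in sorted(missing)]
    env = tags.get("environment")
    if env is not None and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid environment: {env}")
    return problems


# Hypothetical resource with incomplete tags.
gpu_node_tags = {"project": "fraud-detection", "environment": "staging"}
for problem in tag_violations(gpu_node_tags):
    print(problem)
```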

6. Right-Size Your AI Infrastructure

It’s tempting to go big — more GPUs, more memory, more nodes. But right-sizing is your secret weapon.

Not all AI workloads need NVIDIA A100s or top-tier TPUs. In fact:

- Many training jobs run fine on lower-tier instances in exchange for longer training time

- Batch inference can be parallelized across cheaper CPUs or mixed compute types

- Memory usage can often be optimized through batching and gradient checkpointing

Right-sizing ensures your AI model cost management is tightly matched to workload needs, not vanity specs.

FinOps for AI insight: Periodically audit instance usage vs. actual performance benchmarks to identify overkill.

Done consistently, right-sizing is how you achieve AI infrastructure cost optimization.
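A periodic utilization audit can start very simply. The sketch below flags instances whose average GPU utilization falls under a threshold; the instance names, the sample values, and the 30% cutoff are assumptions for illustration, and real samples would come from your monitoring system.

```python
def flag_underused(utilization_by_instance, threshold=0.3):
    """Return names of instances whose average utilization is below threshold.

    utilization_by_instance maps an instance name to utilization samples
    in the range 0.0 to 1.0. Instances with no samples are skipped.
    """
    flagged = []
    for name, samples in utilization_by_instance.items():
        if samples and sum(samples) / len(samples) < threshold:
            flagged.append(name)
    return sorted(flagged)


# Hypothetical samples collected over an audit window.
samples = {
    "train-a": [0.9, 0.8],
    "infer-b": [0.1, 0.2],
    "new-c": [],
}
print(flag_underused(samples))
```

A flagged instance is a candidate for a smaller instance type or for consolidation, not an automatic downgrade; check latency and throughput requirements first.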

7. Monitor and Control Generative AI Costs

Generative AI costs, from LLM APIs to custom foundation model training, can spiral fast. Token-aware patterns and caching help keep them in check. This mirrors lessons learned in our Cashless Payments CBDC Case Study, where cost-efficient transaction scaling was crucial.

Why? Because every prompt, every completion, and every token has a price. And if you’re running GenAI at scale across your products, it adds up quickly.

To control generative AI costs:

- Use token-aware UI patterns to reduce waste (e.g. prompt previews, query limits)

- Cache common responses when appropriate

- Evaluate prompt engineering to reduce token usage while maintaining quality

- Regularly review API call logs for misuse or overuse

Generative AI cost control tactic: Set hard and soft usage limits by user/team/application, and revisit weekly.
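The tactics above (token-based pricing, caching, and soft and hard limits) can be sketched together as follows. This is a minimal in-memory illustration: the prices, limits, and team name are assumptions, and the lambda stands in for a real LLM API call rather than any specific provider's client.

```python
from collections import defaultdict


def request_cost(prompt_tokens, completion_tokens, price_in_per_1k, price_out_per_1k):
    """Estimate the cost of one call from token counts and per-1,000-token prices."""
    return (prompt_tokens / 1000) * price_in_per_1k + (completion_tokens / 1000) * price_out_per_1k


class UsageLimiter:
    """Track tokens per team and apply a soft (warn) and hard (block) limit."""

    def __init__(self, soft_limit, hard_limit):
        if soft_limit > hard_limit:
            raise ValueError("soft_limit must not exceed hard_limit")
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.used = defaultdict(int)

    def record(self, team, tokens):
        projected = self.used[team] + tokens
        if projected > self.hard_limit:
            return "blocked"  # request rejected, usage left unchanged
        self.used[team] = projected
        return "soft_limit" if projected > self.soft_limit else "ok"


class CachedClient:
    """Wrap a model-calling function so repeated prompts are served from memory."""

    def __init__(self, call_model):
        self._call_model = call_model
        self._cache = {}
        self.billable_calls = 0

    def complete(self, prompt):
        key = " ".join(prompt.split())  # collapse whitespace so trivial variants share an entry
        if key not in self._cache:
            self.billable_calls += 1
            self._cache[key] = self._call_model(prompt)
        return self._cache[key]


limiter = UsageLimiter(soft_limit=100, hard_limit=200)
client = CachedClient(lambda prompt: f"echo: {prompt}")  # stand-in for a real LLM call
client.complete("What is FinOps?")
client.complete("What  is FinOps?")  # same prompt after normalization, served from cache
print(limiter.record("search-team", 50), client.billable_calls)
```

Caching only makes sense for prompts whose answers do not depend on the user or on fresh data, which is why the list above says "when appropriate".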

This is especially important for teams practicing FinOps for machine learning (ML) in cloud-native environments.

8. Build a Governance Layer for AI Workload Management

Cost control without governance is chaos. That’s why scalable AI operations require a FinOps-aligned governance model.

This includes:

- A cross-functional AI FinOps task force (finance, engineering, product)

- Quarterly cost reviews by workload and team

- SLA-based cost vs. performance tracking

- Centralized dashboards for AI workload governance

- Playbooks for provisioning, approval, and scaling policies

Governance isn’t red tape; it’s your defense against surprise cloud bills and shadow AI projects that derail budgets.

Cloud spend management insight: Create an “AI Cost Council” that owns policies, reviews decisions, and evolves best practices.

Real-World AI Implementation Challenges, and How FinOps Solves Them

Let’s be real. Most teams face these AI implementation challenges:

- Data scientists unaware of cost implications

- Over-provisioned compute clusters

- Limited visibility into usage vs. outcomes

- Friction between finance and engineering

This is exactly where FinOps for cloud AI thrives. It bridges the gap. It gives teams a shared language of cost, performance, and value.

Whether you’re running GenAI apps or real-time AI pipelines, FinOps creates a culture of shared accountability that scales.

Quick Checklist: FinOps Best Practices for AI

Here’s a snapshot you can share with your team:

| Strategy | Action Item |
|---|---|
| Business Alignment | Tie each AI workload to clear business goals |
| Model Selection | Choose cost-effective models or APIs |
| Compute Optimization | Use spot instances and auto-scaling |
| Budgeting & Alerts | Set budgets and real-time alerts |
| Cost Attribution | Tag and track resources by project |
| Right-Sizing | Match instance specs to actual needs |
| GenAI Monitoring | Control token usage and API calls |
| Governance | Create an AI FinOps task force and policies |

The Future of AI is Scalable, And So is Your Budget

AI is evolving fast. Your business probably is too.

But no matter how advanced your models get, cost will always be part of the equation. That’s why now is the time to embrace cloud financial operations for AI — not as a compliance function, but as a competitive advantage.

With the right FinOps best practices for AI, you can scale smarter, innovate faster, and keep your CFO smiling.

So whether you’re leading AI at a tech startup, a large enterprise, or a regulated institution, the message is the same:

Make FinOps your AI co-pilot. You’ll thank yourself later.

Final Takeaways

- FinOps for AI isn’t about cutting corners; it’s about spending with purpose.

- AI cost optimization is a shared responsibility across tech, product, and finance.

- Tools, dashboards, and playbooks are helpful, but culture is what makes FinOps stick.

- Start small. Align goals. Measure outcomes. Iterate continuously.

- AI isn’t cheap, but it can be sustainable.

Ready to optimize your AI future? Schedule a call with our AI experts today. We can help you integrate FinOps strategies into your AI roadmap.

FAQs

What is FinOps in simple terms?

FinOps is a way for engineering, finance, and business teams to work together and manage cloud costs more effectively. It’s about spending smarter, not just spending less.

What is FinOps for AI?

FinOps for AI applies cloud financial operations to AI workloads. It helps teams manage and optimize the cost of training, deploying, and scaling AI models in the cloud.

Why is FinOps important for AI workloads?

AI workloads can rack up cloud costs fast, especially with GPUs, data storage, and inference. FinOps ensures spending aligns with business value and doesn’t spiral out of control.

Can small businesses benefit from FinOps?

Absolutely. Even small AI teams can waste money without clear visibility or cost control. FinOps brings structure and accountability, helping startups and SMBs get more value from every dollar.

What are the main challenges in AI cost optimization?

The biggest challenges include unpredictable workloads, over-provisioned infrastructure, lack of cost visibility, and runaway generative AI usage. FinOps helps tackle all of these with strategy and shared ownership.